A changing world needs changing methods: six steps for evaluations to assess “what will work”

Climate change is creating unpredictable futures for sustainable development. Yet evaluation methods for assessing development progress have largely remained unchanged. Emilie Beauchamp and Stefano D’Errico explain why evaluations must urgently turn from assessing 'what works' to 'what will work' and introduce a new guide to evaluation in a time of climate change.

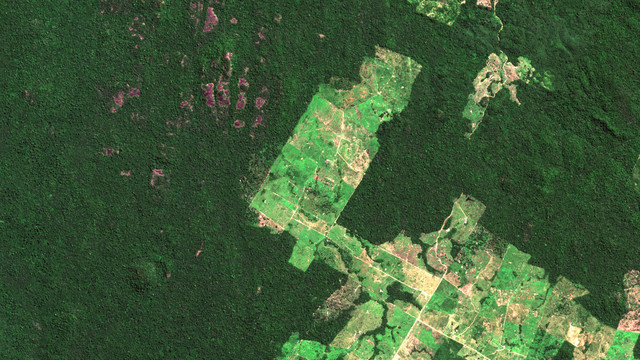

Climate change, with its unpredictable magnitude and frequency of impacts, has significant implications for how and what evidence is gathered to support sustainable development policies (Photo: Janice Corera/Oxfam via Flickr, CC BY-NC-ND 2.0)

The world has changed – evaluations have not.

Seven years after the adoption of the 2030 Agenda for Sustainable Development and the Paris Agreement, the world has changed dramatically. COVID-19 has hit economies and health systems around the world, while the biodiversity crisis is threatening the ecosystems upon which we all depend.

Climate change, with its increased and unpredictable magnitude and frequency of climatic impacts and shocks, poses radical challenges to sustainable development progress.

These changing circumstances have significant implications for how and what evidence is gathered to support sustainable development policies: solutions that have worked in the past may no longer be viable, and past trends can no longer be relied on to predict future progress in the face of increasing uncertainty.

Despite the changing contexts, the evaluation approaches and methods used to develop evidence on sustainable development have largely remained the same. Evaluations neither take into account climate impacts that have occurred or are occurring, nor consider future climate risks when assessing the effects of interventions and making recommendations for future action. In addition, many evaluations are still being undertaken in silos.

In short, current evaluative practices are ill-designed to provide the appropriate evidence or include all the relevant voices needed to address the climate crisis. This is compounded by the tendency for evaluations to be used retrospectively for reporting so that donors can be accountable to taxpayers, rather than as a process targeted towards learning, adaptive management and mutual accountability.

Six steps to design evaluations for uncertain futures

Evaluations have a critical role to play in responding to climate change by providing a relevant and durable evidence base for designing and adapting sustainable development interventions.

To support this, IIED has published a new practical guide for integrating climate risks into sustainable development evaluation. The guide presents six essential steps for evaluators and commissioners to move their evaluations from assessing 'what works' to 'what will work'. It also includes practical tools to help users integrate climate risks into their evaluation designs.

The guide is based on interviews with a dozen monitoring, evaluation and learning (MEL) experts and practitioners in national governments and specialist organisations, with a focus on developing countries. The publication incorporates learning from IIED’s extensive MEL work linked to the Sustainable Development Goals, national and devolved climate evaluation frameworks, biodiversity and life under water.

Evaluations must:

1. Identify appropriate evaluation criteria and principles

While many development evaluations use the criteria developed by the Organisation for Economic Co-operation and Development's Development Assistance Committee (OECD-DAC), evaluators must not shy away from using principles to complement or replace this set of six criteria. For example, users can apply principles of equity or resilience grounded in the Paris Agreement or the 2030 Agenda.

This is important as evaluation criteria and principles represent the values by which the intervention will be judged, guiding the design of evaluation questions.

2. Identify which climate risks are relevant to the context of the intervention

Interventions can fail because climate change risks affect them, or because they create new risks by failing to adequately consider the climate change context. Users must identify the relevant types of risk early in the evaluation design. The guide provides examples based on five types of risk.

3. Develop evaluation questions that consider how the evaluation criteria and principles relate to climate risks

Developing the right evaluation questions is critical to ensuring that an evaluation integrates climate risks and provides strong evidence for what will work. Users can do this by mapping climate risks against their selected criteria and principles, and by introducing both predictive and retrospective perspectives in their questions.
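As a rough illustration only, the mapping described in this step could be sketched as a small question matrix. The sketch below is not taken from the guide: the risk labels, question templates and names such as `build_question_matrix` are hypothetical, and the selected criteria and principles are simply examples (two OECD-DAC criteria plus the equity and resilience principles mentioned above).

```python
from dataclasses import dataclass
from itertools import product

# Example selection: two OECD-DAC criteria plus two principles named in the article.
CRITERIA_AND_PRINCIPLES = ["effectiveness", "sustainability", "equity", "resilience"]

# Hypothetical risk labels, for illustration only; the guide's own
# five risk types are not listed in this article.
CLIMATE_RISKS = ["drought", "flooding"]

# Hypothetical question templates, one per perspective.
TEMPLATES = {
    "retrospective": "How have {risk} impacts to date affected the {criterion} of the intervention?",
    "predictive": "How are future {risk} risks likely to affect the {criterion} of the intervention?",
}


@dataclass(frozen=True)
class EvaluationQuestion:
    criterion: str
    risk: str
    perspective: str
    text: str


def build_question_matrix(criteria, risks):
    """Pair every criterion or principle with every risk, from both perspectives."""
    questions = []
    for criterion, risk in product(criteria, risks):
        for perspective, template in TEMPLATES.items():
            text = template.format(risk=risk, criterion=criterion)
            questions.append(EvaluationQuestion(criterion, risk, perspective, text))
    return questions


if __name__ == "__main__":
    for question in build_question_matrix(CLIMATE_RISKS and CRITERIA_AND_PRINCIPLES, CLIMATE_RISKS):
        print(f"[{question.perspective}] {question.text}")
```

In practice a matrix like this would only be a starting point: evaluators would still need to discard pairings that are irrelevant to the context and reword the remaining questions with stakeholders.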

4. Identify the data and data sources needed to carry out the evaluation

Using appropriate data sources is critical to addressing the complexity of sustainable development interventions. Evaluations must also investigate ethics and equity issues in selecting data sources.

We provide examples of data sources that are often neglected in evaluations, such as historical climate data, Earth observation and big data, local and Indigenous knowledge, and perception data and citizen science.

5. Implement good evaluation practices

Evaluations must follow practices that embed the selected principles and criteria in their activities and methodologies. Users must consider multi-scalar contexts, seek multi-stakeholder engagement, ensure local inclusion and co-creation, and integrate across systems.

6. Create learning pathways

Without clear learning strategies, evaluations can remain box-ticking exercises read by few, with little follow-up on recommendations. To avoid this, evaluations must design activities, materials and processes – and provide the accompanying budgets – to share findings with all stakeholders, not just donors.

This guide aims to support decision makers and practitioners across national governments and the international donors and civil society that support them.

We hope to revise this guide in future, updating it to include experiences and feedback from people undertaking evaluations in line with these recommendations. We therefore want to hear from users about their experience of using the guide, and to develop a community of practice that will enhance learning for better evaluations. Get in touch!